Introduction to Vector Databases: input, more input!


I've been working for a few days with my colleague Olivier on an AI project. Our idea is to create a tool that automates documentation, refactoring, and test writing tasks for a repository’s code. As of today, we are not entirely sure if, from an operational standpoint, the effort is worth it, mainly because we’ve seen that there are services specifically developed for this, such as Cursor or Pythagora. However, what is certain is that we are learning a lot—besides losing a few hours of sleep… 😴

When we first approach a new technology, we often feel overwhelmed by a torrent of new concepts and tools. In the case of AI-driven software development, this initial flood brings terms like LLMs, vector databases, retrievers, RAGs, AI agents, sentence transformers, etc.

In this post, I will focus on vector databases (the title doesn’t lie). They are one of the key components in this field. Besides, the best way to truly understand a concept is to explain it simply, so if this post helps you as well, it means I haven’t misunderstood them completely. 😊

Vectors

To understand what vector databases are and how they work, we first need to understand what a vector is.

In the field of machine learning, a vector is an ordered list of numbers representing data of any kind, including unstructured data such as text documents, images, audio, or videos.

The number of values in a vector is known as its dimension, and vector databases handle vectors with hundreds or even thousands of dimensions. For example, OpenAI's text-embedding-3-small model generates vectors with 1,536 dimensions.
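As a minimal sketch, a vector can be held in a plain Python list, and its dimension is simply the length of that list. The values below are invented for illustration and do not come from any real model.

```python
# A vector is an ordered list of numbers.
# These values are invented for illustration only.
vector = [0.12, -0.48, 0.33, 0.91]

# The dimension is the number of values in the vector.
dimension = len(vector)
print(dimension)  # 4
```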

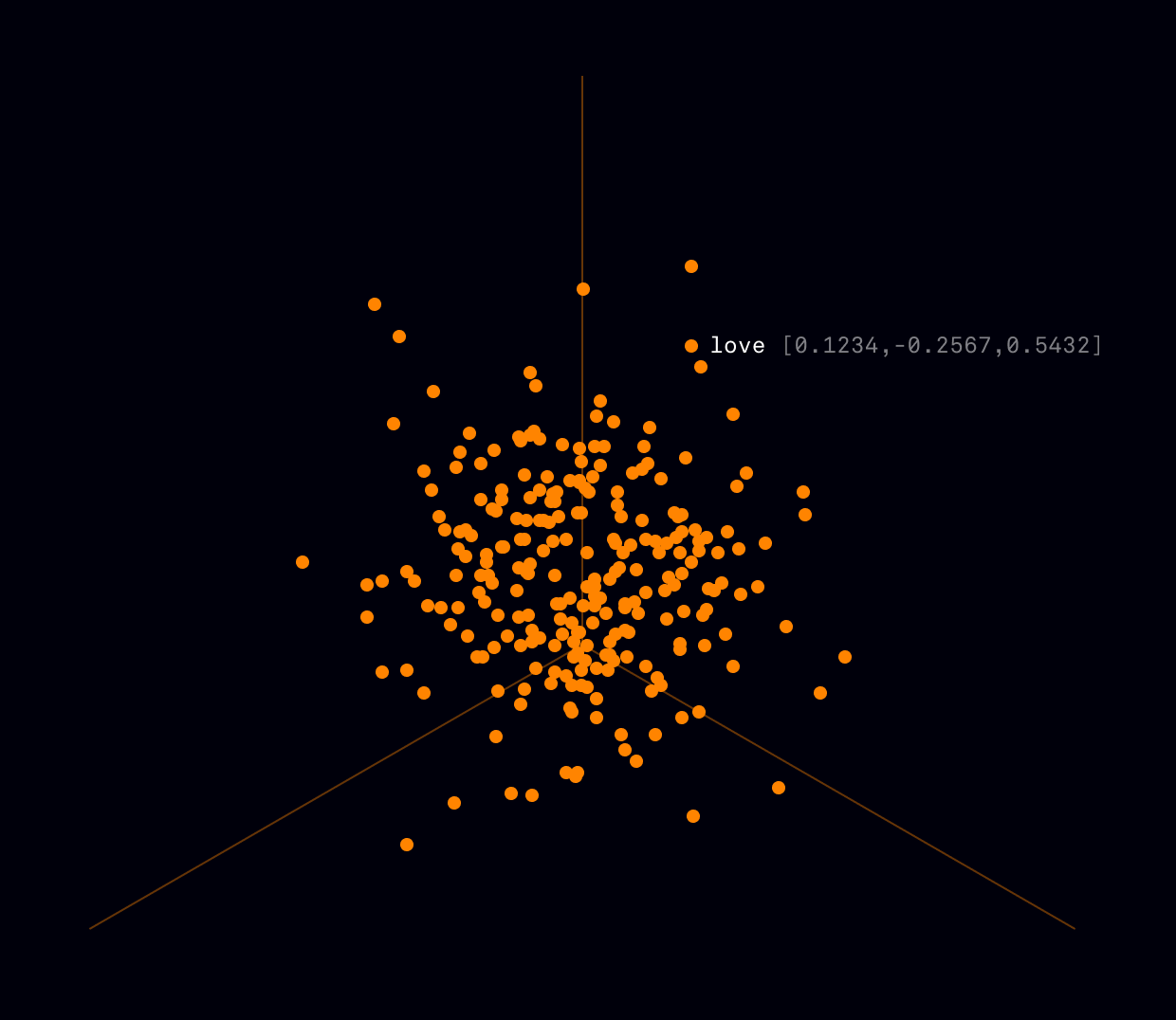

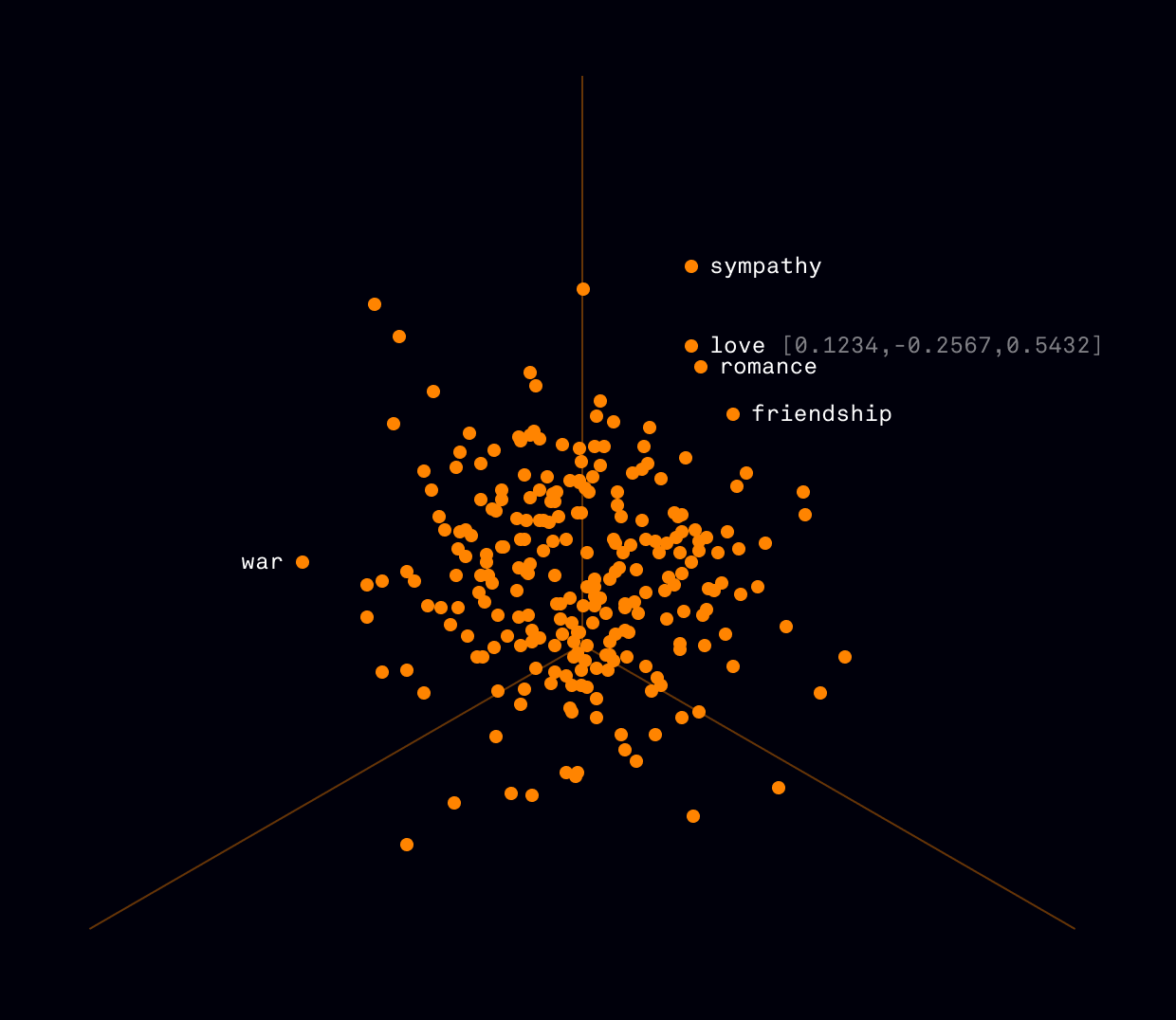

1,536 dimensions make up a very large list of numbers. If we placed one here, you would likely leave the post out of boredom from scrolling. So, for a more manageable example, let's show the 3-dimensional vector representation of the word “love”:

[ 0.1234, -0.2567, 0.5432 ]

Embeddings

Now that we have a clearer understanding of vectors, it's time to see how they are stored in a vector database: each vector is embedded as a point in a multidimensional space.

The number of dimensions in the space is determined by the dimensionality of the stored vectors. Personally, imagining points distributed in 768, 1,536, or 3,072 dimensions is a bit challenging. But maybe you’ve watched Interstellar dozens of times and feel capable of doing it. 😅

If not, let's return to our previous example. Since we represented “love” with a vector of just three dimensions, it will be placed alongside other embeddings as a point in an XYZ coordinate system. That makes it easier to visualize, right?
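To make the picture concrete, here is a rough sketch of a tiny in-memory store that keeps 3-dimensional embeddings as points. Only the vector for “love” comes from the example above; the other words, their values, and the `add_embedding` helper are hypothetical. A real vector database adds indexing and persistence on top of this idea.

```python
# Hypothetical in-memory "store": each word maps to a point (x, y, z).
DIMENSION = 3
store: dict[str, tuple[float, ...]] = {}

def add_embedding(word: str, vector: list[float]) -> None:
    """Store a vector as a point, rejecting vectors of the wrong dimension."""
    if len(vector) != DIMENSION:
        raise ValueError(f"expected {DIMENSION} values, got {len(vector)}")
    store[word] = tuple(vector)

add_embedding("love", [0.1234, -0.2567, 0.5432])       # from the example above
add_embedding("affection", [0.1100, -0.2300, 0.5600])  # invented values
add_embedding("invoice", [-0.7000, 0.4100, -0.0900])   # invented values

# Each stored embedding can be read back as XYZ coordinates.
x, y, z = store["love"]
print(x, y, z)
```

All points in the same space must share the same dimension, which is why the helper rejects anything that is not 3 values long.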

Queries

Now that we know how data is stored in a vector database, let's see how queries are performed.

First, the query is converted into an embedding that can be compared with the rest of the vectors in the database.

Since embeddings are numerical representations, the distance between them can be measured using different mathematical metrics. The most common ones include:

- Euclidean distance ( √Σ(xᵢ - yᵢ)² ): measures the geometric distance in a multidimensional space.

- Cosine similarity ( cosθ ): measures the cosine of the angle between two vectors, indicating how similar they are in direction regardless of their magnitude.

- Manhattan distance ( Σ |xᵢ - yᵢ| ): calculates the sum of the absolute differences between each coordinate.
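The three metrics above can be sketched in a few lines of plain Python. The function names and sample vectors are my own choices for illustration; in practice a library such as NumPy, or the database itself, would compute these for you.

```python
import math

def euclidean_distance(x, y):
    """Square root of the sum of squared coordinate differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def cosine_similarity(x, y):
    """Dot product divided by the product of the two vector lengths."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y)

def manhattan_distance(x, y):
    """Sum of the absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(x, y))

x = [1.0, 2.0, 3.0]
y = [4.0, 6.0, 3.0]
print(euclidean_distance(x, y))  # 5.0
print(manhattan_distance(x, y))  # 7.0

# Same direction gives 1.0, perpendicular vectors give 0.0.
print(cosine_similarity([1.0, 0.0], [2.0, 0.0]))  # 1.0
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))  # 0.0
```

Note that the two distances get smaller as vectors get closer, while cosine similarity gets larger, so a search has to sort in the right direction for the metric it uses.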

Vector databases use algorithms such as k-NN (k-Nearest Neighbors) and ANN (Approximate Nearest Neighbors), which leverage these metrics to return the vectors closest to the query.
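As a rough sketch, exact k-NN can be written as a brute-force scan: measure the distance from the query to every stored vector and keep the k closest. The `k_nearest` function and the sample data are hypothetical. ANN approaches avoid scanning everything by using an index, trading a little accuracy for speed, and are not shown here.

```python
import math

def euclidean_distance(x, y):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def k_nearest(query, vectors, k):
    """Exact k-NN: compare the query with every stored vector, keep the k closest."""
    ranked = sorted(
        vectors.items(),
        key=lambda item: euclidean_distance(query, item[1]),
    )
    return [name for name, _ in ranked[:k]]

# Invented sample data: names mapped to 3-dimensional vectors.
vectors = {
    "a": [0.0, 0.0, 0.0],
    "b": [1.0, 1.0, 1.0],
    "c": [5.0, 5.0, 5.0],
    "d": [0.9, 1.1, 1.0],
}

print(k_nearest([1.0, 1.0, 1.0], vectors, k=2))  # ['b', 'd']
```

The brute-force version does work proportional to the number of stored vectors for every query, which is the cost that approximate methods are designed to reduce.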

This method allows for efficient searches across large datasets since it is not necessary to analyze the original content behind each vector; only the vectors' positions within the multidimensional space need to be compared.

While efficiency in queries is crucial, the fundamental characteristic of vector databases is their ability to find the vectors closest to the query, a process known as similarity search.

Importance of Similarity Search

But why is similarity search so important? To fully grasp this, we need to go back to the beginning: the transformation of data into vectors, also known as embedding.

The entity responsible for generating embeddings is not the database itself but a specialized model called an embedding model. These models do not merely translate data into numbers; they encapsulate the data's semantics and context.

And here lies the key to understanding the importance of this type of search. Similarity searches not only identify elements that are numerically close but also capture semantic similarities, enabling LLMs to recognize deep relationships within stored data.

In short, vector databases not only facilitate access to information but also enhance LLMs’ ability to interpret and leverage available knowledge. What Stephanie would have given to have one in 1986!